# Introduction

In this blog post, we will cover the very basics of our first machine learning algorithm: Linear Regression.

As discussed in previous posts, there are two main types of supervised machine learning algorithms:

1. Regression

2. Classification

Linear Regression falls under the umbrella of regression algorithms.

So what exactly is regression? And what does the term "linear" mean?

Let's understand it with an example.

Download the dataset (`FuelConsumptionCo2.csv`) from the following link: https://github.com/srujaneResearch/creationcodes/tree/master/datasets

```python

import pandas as pd

data = pd.read_csv(r"FuelConsumptionCo2.csv")

data.head(5)

```

|   | MODELYEAR | MAKE | MODEL | VEHICLECLASS | ENGINESIZE | CYLINDERS | TRANSMISSION | FUELTYPE | FUELCONSUMPTION_CITY | FUELCONSUMPTION_HWY | FUELCONSUMPTION_COMB | FUELCONSUMPTION_COMB_MPG | CO2EMISSIONS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2014 | ACURA | ILX | COMPACT | 2.0 | 4 | AS5 | Z | 9.9 | 6.7 | 8.5 | 33 | 196 |
| 1 | 2014 | ACURA | ILX | COMPACT | 2.4 | 4 | M6 | Z | 11.2 | 7.7 | 9.6 | 29 | 221 |
| 2 | 2014 | ACURA | ILX HYBRID | COMPACT | 1.5 | 4 | AV7 | Z | 6.0 | 5.8 | 5.9 | 48 | 136 |
| 3 | 2014 | ACURA | MDX 4WD | SUV - SMALL | 3.5 | 6 | AS6 | Z | 12.7 | 9.1 | 11.1 | 25 | 255 |
| 4 | 2014 | ACURA | RDX AWD | SUV - SMALL | 3.5 | 6 | AS6 | Z | 12.1 | 8.7 | 10.6 | 27 | 244 |

The dataset above records the CO2 emissions of different car models. The question is: can we predict the CO2 emissions of a given car from its engine size using this dataset? With simple linear regression, we can!

In regression, the two most important kinds of variables are the dependent variable and the independent variable.

In our case, the dependent variable is CO2 emissions, because it depends on the other features in the dataset.

The independent variables are all the other features except CO2 emissions.

Our algorithm is Simple Linear Regression, so let's understand the terms. "Simple" means that we will use only one feature as the independent variable.

"Linear" means that the relationship between the independent variable and the dependent variable is assumed to be linear.

So, let's select engine size as the independent variable and CO2 emissions as the dependent variable.

```python

simpleRegressionData = data[["ENGINESIZE","CO2EMISSIONS"]]

```

```python

simpleRegressionData.head(5)

```

|   | ENGINESIZE | CO2EMISSIONS |
|---|---|---|
| 0 | 2.0 | 196 |
| 1 | 2.4 | 221 |
| 2 | 1.5 | 136 |
| 3 | 3.5 | 255 |
| 4 | 3.5 | 244 |

Let's clear up one more thing: linear regression algorithms come in two types:

1. Simple Linear Regression

2. Multiple Linear Regression

In Simple Linear Regression, we have one independent variable and one dependent variable.

In Multiple Linear Regression, we have more than one independent variable and only one dependent variable.

For now, we will discuss Simple Linear Regression.

Let's check whether the relationship between these two features is linear.

```python

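import matplotlib.pyplot as plt

# Each point is one car: engine size on the x-axis, CO2 emissions on the y-axis
plt.scatter(simpleRegressionData["ENGINESIZE"], simpleRegressionData["CO2EMISSIONS"])
plt.xlabel("ENGINESIZE")
plt.ylabel("CO2EMISSIONS")
plt.show()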

```

The scatter plot shows a roughly linear relationship: as engine size increases, CO2 emissions increase as well.
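The scatter plot is a visual check. To put a number on how linear the relationship is, you can compute the Pearson correlation coefficient, which is close to +1 when the points lie near an increasing straight line. The sketch below uses NumPy on the five rows printed above only, so treat its result as illustrative; on the full dataset, `simpleRegressionData["ENGINESIZE"].corr(simpleRegressionData["CO2EMISSIONS"])` computes the same statistic with pandas.

```python
import numpy as np

# Only the five rows printed above (ENGINESIZE, CO2EMISSIONS)
engine_size = np.array([2.0, 2.4, 1.5, 3.5, 3.5])
co2 = np.array([196, 221, 136, 255, 244])

# Pearson correlation: +1 = perfect increasing line, 0 = no linear relationship
r = np.corrcoef(engine_size, co2)[0, 1]
print(f"Pearson r = {r:.3f}")
```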

# The Best Fit Line

Let's now look at the logic behind simple linear regression.

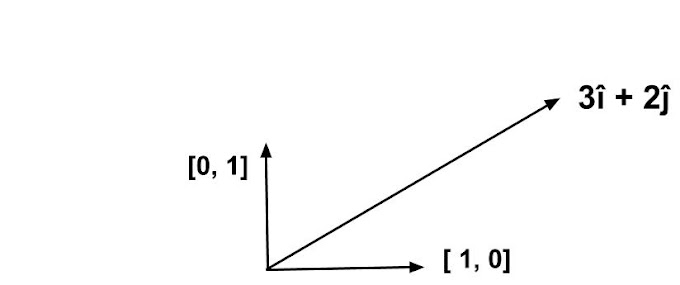

Our main goal in regression is to draw a line that best fits the relationship between the dependent and independent variables.

Here, the dependent variable is "CO2EMISSIONS" and the independent variable is "ENGINESIZE", which means that CO2 emissions are a function of engine size.

For convenience, let's call "CO2 emissions" the variable Y and "Engine Size" the variable X.

Now, we have to find a function that describes the relationship between X and Y.

How can we do that? It's simple: we know that the relationship between our variables is linear. This means that the function describing the relationship between X and Y can be the equation of a line.

Yes, you heard it right. We are back to the basics of mathematics! As you know, the equation of a line is:

Y = mX + c, where m is the slope (or gradient) and c is the intercept.

Great, we have the formula. But we only know the X and Y values; we don't know m and c. And that's the crux of it: the whole job is to find the right values of m and c.

There are many ways to find the slope and the intercept. We will cover them in the Mathematics for Machine Learning course, where I will demonstrate the gradient descent algorithm. For now, let's get back to our topic.

You don't need to know much of the mathematics here: Python libraries provide a ready-made linear regression implementation that you just have to call. Still, knowing what happens behind the scenes of this beautiful algorithm will give you a better understanding.
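As a peek behind the scenes: scikit-learn's `LinearRegression` fits the line by ordinary least squares, i.e. it picks the m and c that minimize the sum of squared residual errors (residual errors are explained in the next section). For a single feature, this has a closed-form solution. The sketch below applies it to the five rows shown earlier only, so its m and c will differ from the values the model learns on the full dataset later in this post, and it cross-checks the result against NumPy's `np.polyfit`.

```python
import numpy as np

# Only the five rows shown earlier: X = ENGINESIZE, Y = CO2EMISSIONS
X = np.array([2.0, 2.4, 1.5, 3.5, 3.5])
Y = np.array([196, 221, 136, 255, 244])

# Ordinary least squares, closed form for one feature:
#   m = sum((X - mean(X)) * (Y - mean(Y))) / sum((X - mean(X))**2)
#   c = mean(Y) - m * mean(X)
x_mean, y_mean = X.mean(), Y.mean()
m = np.sum((X - x_mean) * (Y - y_mean)) / np.sum((X - x_mean) ** 2)
c = y_mean - m * x_mean
print(f"closed form: m = {m:.3f}, c = {c:.3f}")

# Cross-check: a degree-1 least-squares polynomial fit returns [slope, intercept]
m_check, c_check = np.polyfit(X, Y, 1)
print(f"polyfit:     m = {m_check:.3f}, c = {c_check:.3f}")
```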

# Residual errors

Let's return to the best fit line. So far, we know that the equation of the line Y = mX + c can describe the linear relationship between the variables, and that we have to find the values of m and c.

We don't know the right values of m and c in advance, so we start with a guess and check how good it is. For example, say we guess m = 5 and c = 2. Let's check whether these values give a prediction that is correct or at least close.

```python

simpleRegressionData.head(1)

```

|   | ENGINESIZE | CO2EMISSIONS |
|---|---|---|
| 0 | 2.0 | 196 |

Let's check for EngineSize = 2.0.

Our guessed values are m = 5 and c = 2.

Then, CO2 Emissions = EngineSize * m + c

= 2.0 * 5 + 2

= 12

The predicted value is very far from the actual (true) value, which is 196!

So we need to find other values of m and c that give a predicted CO2 emission closer to the actual one. This process is called minimizing the error.

Right now the error is 196 - 12 = 184, and we want to bring it as close to 0 as possible.

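The error computed as actual value - predicted value is called the residual error.

Ultimately, we want the residual errors across all data points to be as small as possible, and the values of m and c that achieve this become our final values. On real data the residuals will never all be exactly 0, so in practice we minimize an overall measure such as the sum of squared residuals.

That is what fitting a regression model means: searching for the values of m and c that make the error as small as possible. Iterative methods do this by adjusting m and c, re-measuring the error, and repeating until it stops decreasing.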

Notice the beauty of this machine learning algorithm: we started by observing the relationship, turned it into the problem of fitting a line, and now we are finding the true parameters of that line, m and c, by minimizing the error given by the formula:

Error = Actual value - Predicted value
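The formula above gives one residual per data point. To compare two candidate lines, we need a single score, and a common choice is the sum of squared residuals, which is exactly what ordinary least squares minimizes. Here is a minimal NumPy sketch on the five rows shown earlier; the second guess, m = 40 and c = 120, is purely hypothetical and only serves to show that a better line yields a smaller score.

```python
import numpy as np

X = np.array([2.0, 2.4, 1.5, 3.5, 3.5])   # ENGINESIZE (first five rows)
Y = np.array([196, 221, 136, 255, 244])   # actual CO2EMISSIONS

def residuals(m, c):
    """Residual error for each row: actual value - predicted value."""
    predicted = m * X + c
    return Y - predicted

def sum_squared_residuals(m, c):
    """A single number summarising how badly the line (m, c) fits all rows."""
    return np.sum(residuals(m, c) ** 2)

first_guess = residuals(5, 2)
print(first_guess)                      # first entry: 196 - (5 * 2.0 + 2) = 184.0
print(sum_squared_residuals(5, 2))      # the initial guess m = 5, c = 2
print(sum_squared_residuals(40, 120))   # hypothetical better guess: much smaller
```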

There are several cool ways by which we can find the approximate values of m, c, and one such way is gradient descent. I will explain that in my next post, but for now, let's stick to the topic and move on.

# Python Library For Linear Regression

Here we will use a pre-built Python library that does all of the above computation automatically and gives us the result.

scikit-learn (imported as `sklearn`) is a popular Python library that implements many machine learning algorithms, including linear regression.

Let's use that library and create our model.

```python

from sklearn import linear_model

```

We will create an object of the `LinearRegression` class available in `linear_model`.

```python

regression = linear_model.LinearRegression()

```

Next, split your dataset into two parts: one part will be used to train the model and the other to test it.

```python

ary = simpleRegressionData.values #To convert the data-set from DataFrame to an Array

```

```python

ary

```

`array([[ 2. , 196. ], [ 2.4, 221. ], [ 1.5, 136. ], ..., [ 3. , 271. ], [ 3.2, 260. ], [ 3.2, 294. ]])`

Here I converted the DataFrame into a NumPy array. scikit-learn also accepts DataFrames directly, but the array form makes the positional slicing in the next steps straightforward.

Now, let's split this dataset, using roughly 70% as the training set and the remaining 30% as the testing set.

```python

ary.shape

```

(1067, 2)

# For Model Training

```python

train_x = ary[:767, 0]  # independent variable (ENGINESIZE), first 767 rows

train_y = ary[:767, 1]  # dependent variable (CO2EMISSIONS), first 767 rows

```

When converting your dataset and splitting it into input and output variables, pay attention to the shape.

```python

train_x.shape

```

(767,)

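This one-dimensional shape will cause an error: scikit-learn expects the input as a 2D array of shape (n_samples, n_features). Reshape it into a column vector with a single column: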

```python

train_x = train_x.reshape((-1,1))

train_x.shape # This is the correct shape.

```

(767, 1)

```python

train_y = train_y.reshape((-1,1))

```

# For Model Testing

```python

test_x = ary[767:, 0].reshape((-1, 1))  # start at row 767 so test rows don't overlap the training rows

test_y = ary[767:, 1].reshape((-1, 1))

```
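The slices above take the first 767 rows for training and the rest for testing, without shuffling. If the CSV rows are ordered in some way (the first rows shown are all ACURA models), a random split is usually safer. Below is a sketch using scikit-learn's `train_test_split`; the 10-row `ary` is a hypothetical stand-in so the snippet runs on its own, and in the post you would pass the real `ary` instead. Note that `y` is kept 1D here, which makes the fitted `coef_` a 1D array instead of the 2D one shown below.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for `ary` (ENGINESIZE, CO2EMISSIONS); use the real array in practice
ary = np.array([
    [2.0, 196], [2.4, 221], [1.5, 136], [3.5, 255], [3.5, 244],
    [3.0, 271], [3.2, 260], [3.2, 294], [1.8, 180], [2.5, 210],
])

X = ary[:, [0]]  # 2D, shape (n_samples, 1): ENGINESIZE
y = ary[:, 1]    # 1D target: CO2EMISSIONS

# Shuffled 70/30 split; random_state fixes the shuffle so the split is reproducible
train_x, test_x, train_y, test_y = train_test_split(X, y, test_size=0.3, random_state=42)
print(train_x.shape, test_x.shape)
```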

# Fitting the data-set for model generation

```python

regression.fit(train_x,train_y)

print("The value of m: ", regression.coef_)

print("The value of c: ", regression.intercept_)

```

The value of m: [[39.19769309]]

The value of c: [126.05167201]

The model is created and we have the values of m and c. Let's check whether our model works!

```python

simpleRegressionData.head(5)

```

|   | ENGINESIZE | CO2EMISSIONS |
|---|---|---|
| 0 | 2.0 | 196 |
| 1 | 2.4 | 221 |
| 2 | 1.5 | 136 |
| 3 | 3.5 | 255 |
| 4 | 3.5 | 244 |

```python

co2emission_predict = regression.predict([[2.4]]) # for enginesize = 2.4

```

```python

co2emission_predict

```

array([[220.12613543]])

The model predicts about 220.1 for an engine size of 2.4, which is close to the actual value of 221.
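To confirm that the model really is just the line Y = mX + c, you can reproduce the prediction by hand from the printed coefficients. This minimal sketch hard-codes the rounded values of `regression.coef_` and `regression.intercept_` shown above.

```python
# Rounded values printed by regression.coef_ and regression.intercept_ above
m = 39.19769309
c = 126.05167201

engine_size = 2.4
predicted_co2 = m * engine_size + c   # Y = mX + c
print(round(predicted_co2, 4))        # ≈ 220.1261, matching regression.predict([[2.4]])
```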

Always remember that some amount of error will exist in your model; it will never be 100% accurate on real data. In fact, if a model fits its training data perfectly, that is often a sign of over-fitting: the model has memorized noise in the training data and will likely perform worse on new data.

We can quantify this error using the mean squared error (MSE):

MSE = mean((actual value - predicted value)**2)

We can also calculate the mean absolute error (MAE):

MAE = mean(| actual value - predicted value |)
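To see how the two metrics behave, here is a small worked example. The actual values come from the table above, but the predictions are hypothetical numbers chosen for illustration.

```python
import numpy as np

# Actual CO2 emissions from the table; the predictions are hypothetical
actual = np.array([196, 221, 136])
predicted = np.array([200, 216, 180])

errors = actual - predicted       # [-4, 5, -44]
mse = np.mean(errors ** 2)        # (16 + 25 + 1936) / 3
mae = np.mean(np.abs(errors))     # (4 + 5 + 44) / 3
print(f"MSE = {mse:.2f}, MAE = {mae:.2f}")   # MSE = 659.00, MAE = 17.67
```

Because MSE squares each error, the single large error of 44 dominates it, while MAE weights all errors equally.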

```python

predicted = regression.predict(test_x)

```

```python

import numpy as np  # needed for np.mean and np.absolute

print("mean squared error: ", np.mean((test_y - predicted)**2))

print("Absolute mean error: ", np.mean(np.absolute(test_y-predicted)))

```

mean squared error: 720.5231027945638

Absolute mean error: 19.71771163307104

Remember the best fit line? All of this work was about finding that line, so let's plot it using the values of m and c we obtained.

```python

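m = regression.coef_[0][0]    # slope (coef_ is 2D because train_y was reshaped to 2D)

c = regression.intercept_[0]  # intercept

plt.scatter(ary[:, 0], ary[:, 1])                    # actual data points
plt.plot(ary[:, 0], m * ary[:, 0] + c, color="red")  # best fit line Y = mX + c
plt.xlabel("ENGINESIZE")
plt.ylabel("CO2EMISSIONS")
plt.show()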

```

That's all about Simple Linear Regression. We fitted the line and estimated its coefficients. Yes, there are some errors, but some error always exists; the goal is to keep it small. That is why we measure the error, and more generally the performance of our model, with evaluation metrics.

There are many different evaluation metrics, but scikit-learn provides ready-made implementations, so we don't need to code the formulas ourselves.

# R2 score

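The R2 score (coefficient of determination) measures how well the fitted line explains the variation in the dependent variable. The best possible R2 score is 1.0, a model that always predicts the mean of the target scores 0.0, and the score can be negative for a model that does worse than that. Let's check our R2 score.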
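Under the hood, the R2 score compares the model's squared errors with those of a baseline that always predicts the mean: R2 = 1 - SS_res / SS_tot. The sketch below is a minimal NumPy implementation of that standard definition, demonstrated on the CO2 values from the table above with hypothetical predictions; in practice, use `sklearn.metrics.r2_score` as shown next.

```python
import numpy as np

def r2(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    ss_res = np.sum((actual - predicted) ** 2)       # variation the model fails to explain
    ss_tot = np.sum((actual - actual.mean()) ** 2)   # total variation around the mean
    return 1.0 - ss_res / ss_tot

actual = np.array([196, 221, 136, 255, 244])         # CO2EMISSIONS from the table above
print(r2(actual, actual))                            # perfect predictions -> 1.0
print(r2(actual, np.full(5, actual.mean())))         # always predicting the mean -> 0.0
print(r2(actual, np.full(5, 500.0)))                 # hypothetical far-off predictions -> negative
```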

```python

from sklearn.metrics import r2_score

```

```python

r2_score(test_y,predicted)

```

0.7908979325870634

So, our model explains about 79% of the variation in CO2 emissions on the test set (which is different from being "79% correct").

We have now covered the necessary and important topics of the Simple Linear Regression model in machine learning.

Keep following this blog for more interesting machine learning concepts.
