The most important element in machine learning is the dataset. Before using any machine learning algorithm to develop a model, we first process our dataset so that the model can reach the highest accuracy and the lowest error.

Data processing involves the following phases:

- DATA-SET PREPROCESSING

- DATA-SET CLEANING

- FEATURE SELECTION

- FEATURE SCALING

FEATURE

In machine learning vocabulary, a feature is a column of the dataset.

DATA-SET PREPROCESSING

In machine learning we work with numeric data. We play with numbers because machine learning is built on mathematics.

But the data in a given dataset may or may not be in numeric form.

For example, consider this dataset:

In this dataset, the Species column contains string values. We cannot perform mathematical operations on such data!

So we convert such columns to numeric values. But we have to be very careful: whatever change we make should not change the character of that column.

For example, in the above dataset, I can change the values of the Species column to 0 and 1, where 0 represents Iris-setosa and 1 represents Iris-versicolor. In this way, the column is still categorical, with 0 and 1 as its categories.

Python gives us a very useful library for working with datasets: you can use the pandas library for dataset manipulation.
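As a rough sketch of the 0/1 encoding described above, here is one way it could be done with pandas. The rows and the `SepalLengthCm` column are made up for illustration, and the label spellings are assumed to match your file; this is just one possible approach, not the only one.

```python
import pandas as pd

# Hypothetical rows shaped like the Iris dataset; the values are illustrative only.
df = pd.DataFrame({
    "SepalLengthCm": [5.1, 4.9, 7.0, 6.4],
    "Species": ["Iris-setosa", "Iris-setosa", "Iris-versicolor", "Iris-versicolor"],
})

# Map each species label to a number; the column stays categorical.
species_codes = {"Iris-setosa": 0, "Iris-versicolor": 1}
df["Species"] = df["Species"].map(species_codes)

print(df)
```

Note that `map` turns any label missing from the dictionary into NaN, so it is worth checking the column for missing values afterwards.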

DATA-SET CLEANING

In real-world scenarios, the dataset is rarely well organized. It can contain missing values, garbage values, or unwanted features (columns).

In the dataset cleaning phase, we take care of the missing values, visualize the dataset, understand the relationships between different features, and delete unwanted columns to make our dataset better suited for model generation.
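A minimal sketch of these cleaning steps with pandas might look like the following. The data is invented, the `id` column stands in for an unwanted feature, and filling gaps with the column mean is only one assumed strategy among several.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data with gaps and an identifier column we do not need.
raw = pd.DataFrame({
    "id": [1, 2, 3, 4],
    "square_feet": [150.0, np.nan, 450.0, 600.0],
    "price": [6450.0, 10450.0, np.nan, 18450.0],
})

# Count the missing values in every column.
missing_per_column = raw.isna().sum()
print(missing_per_column)

# Delete the unwanted column.
cleaned = raw.drop(columns=["id"])

# Fill each gap with the mean of its column (an assumed choice).
cleaned = cleaned.fillna(cleaned.mean())

# Inspect how the remaining features relate to each other.
print(cleaned.corr())
```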

FEATURE SCALING

Feature scaling is an important step before applying many algorithms for model generation.

Let's look at the dataset below:

Look at the values of the square_feet column and the price column. The square_feet column has values in the range 150 to 600, but the price column has values in the range 6450 to 18450.

The values in the two columns differ by a large factor.

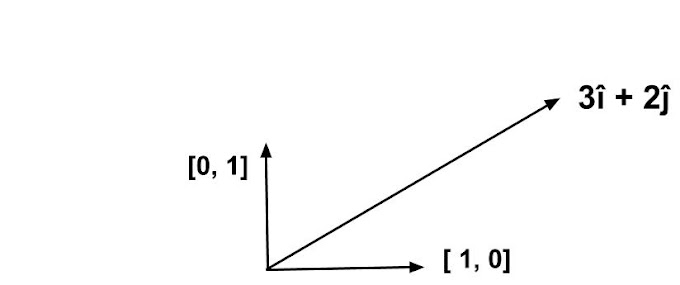

You may wonder why that is a problem. As I told you in previous posts, we treat the columns as axes and then plot the points to see the variation.

Now suppose I take the point (600, 18450). These are large numbers, and to plot them I need a coordinate system with a very large scale. Moreover, the calculations involve much larger numbers, and the column with the bigger range can dominate them.

So, in order to avoid such problems, we normalize the values to make them small, and that is what we mean by feature scaling.

We will use the sklearn.preprocessing module to do so.
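As a quick sketch, this is roughly how scaling could look with `sklearn.preprocessing`. The post does not say which scaler is used, so `MinMaxScaler` is an assumption here, and the rows between the stated ranges are made up.

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler

# Hypothetical rows; only the ranges (150 to 600, 6450 to 18450) come from the post.
houses = pd.DataFrame({
    "square_feet": [150, 300, 450, 600],
    "price": [6450, 10450, 14450, 18450],
})

# MinMaxScaler rescales each column independently to the range [0, 1].
scaler = MinMaxScaler()
scaled = pd.DataFrame(scaler.fit_transform(houses), columns=houses.columns)

print(scaled)
```

After this step both columns share the same range, so neither one dominates just because of its units.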

We will go into much more detail when we study feature scaling in a separate post.

For now, just look at the image below to see what happens when we scale our dataset.

As you can observe, the values in both columns now have a similar range.

But, will this change the behavior of the dataset? Let's plot the dataset before scaling and after scaling.

BEFORE SCALING

AFTER SCALING

So, here you can observe that even after scaling, the nature of the dataset remains unchanged.
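One way to check this claim numerically, rather than by eye, is to compare the correlation between the two columns before and after scaling. The sketch below assumes min-max scaling and uses made-up values inside the ranges mentioned earlier; `min_max` is a helper written just for this example.

```python
import numpy as np

# Hypothetical values; only the endpoints come from the post.
square_feet = np.array([150.0, 300.0, 450.0, 600.0])
price = np.array([6450.0, 9000.0, 15000.0, 18450.0])

def min_max(values):
    """Rescale an array linearly so its values lie in [0, 1]."""
    return (values - values.min()) / (values.max() - values.min())

# Pearson correlation before and after scaling.
corr_before = np.corrcoef(square_feet, price)[0, 1]
corr_after = np.corrcoef(min_max(square_feet), min_max(price))[0, 1]

print(corr_before, corr_after)
```

The two correlations agree because min-max scaling only shifts and stretches each axis; it does not move the points relative to each other.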

We will discuss how this happens, and the main mathematical concepts behind this wonderful mathematical magic, in a separate post.
