# Feature Scaling in Machine Learning

Before applying any machine learning algorithm, we first need to pre-process our data-set. Pre-processing includes cleaning the data, dealing with missing values, analyzing the data-set, and then doing feature scaling.

## So, What is Feature Scaling?

Feature scaling is a method for bringing the features of a data-set onto a comparable numeric range, so that no feature dominates a calculation just because its values happen to be larger.

Let's understand it with an example.

Suppose we have a data-set like this:

```python

import pandas as pd

data = pd.read_csv(r"LR_House_price.csv")

data.head(5)

```

|   | square_feet | price |
|---|---|---|
| 0 | 150 | 6450 |
| 1 | 200 | 7450 |
| 2 | 250 | 8450 |
| 3 | 300 | 9450 |
| 4 | 350 | 11450 |

Observe the values of the feature "square_feet" and the values of "price". The values in price are much larger than the values in square_feet.

So, if I want to do some mathematical calculations, say run gradient descent to find the best-fit line, the calculations involve numbers of very different magnitudes. Gradient descent can then need many more steps to converge, or may not converge at all for a given learning rate.

To avoid such time-consuming calculations, we scale our data-set so that every feature takes values in a similar range.
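To make the gradient descent point concrete, here is a small sketch that uses only the five rows shown above, with square_feet as the input and price as the target. The helper names (`gradient_descent`, `mse`), the learning rate of 0.1 and the 20 steps are my own choices for illustration, and only the input column is scaled.

```python
import numpy as np

# Assumption: only the five rows printed by data.head(5).
x = np.array([150.0, 200.0, 250.0, 300.0, 350.0])        # square_feet
y = np.array([6450.0, 7450.0, 8450.0, 9450.0, 11450.0])  # price

def gradient_descent(x, y, lr=0.1, steps=20):
    """Fit y ~ w * x + b by plain gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        error = w * x + b - y               # residuals with the current parameters
        w -= lr * 2.0 * np.mean(error * x)  # d(MSE)/dw
        b -= lr * 2.0 * np.mean(error)      # d(MSE)/db
    return w, b

def mse(x, y, w, b):
    return np.mean((w * x + b - y) ** 2)

# Raw input: every update overshoots, so the parameters blow up.
w_raw, b_raw = gradient_descent(x, y)

# Standardized input: same learning rate, same number of steps.
x_scaled = (x - x.mean()) / x.std()
w_scaled, b_scaled = gradient_descent(x_scaled, y)

print("raw parameters:   ", w_raw, b_raw)
print("scaled parameters:", w_scaled, b_scaled)
print("scaled loss:", mse(x_scaled, y, 0.0, 0.0), "->", mse(x_scaled, y, w_scaled, b_scaled))
```

With the raw input the parameters grow without bound at this learning rate, while with the standardized input the loss drops steadily. A much smaller learning rate would keep the raw version stable, but it would then need far more steps.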

# Python Library For Feature Scaling

Python's scikit-learn library contains these data-preprocessing tools, so we just have to import them to make our life easy.

So, let's import the sklearn.preprocessing module. Don't worry, I will explain all the types of feature scaling, but first I want to show you how this data-set looks after we apply one of the scaling methods, known as StandardScaler.

```python

import sklearn.preprocessing as pp

ss = pp.StandardScaler() #load StandardScaler()

scaledData = ss.fit_transform(data) #fit_transform() takes data-set and scales it.

scaledData

```

array([[-1.2377055 , -1.11477282],

[-0.87670806, -0.87091627],

[-0.51571062, -0.62705971],

[-0.15471319, -0.38320316],

[ 0.20628425, 0.10450995],

[ 0.56728169, 1.07993617],

[ 2.01127143, 1.81150584]])

By default, scaling returns a NumPy array, so you need to convert it back into a DataFrame.

```python

scaledData = pd.DataFrame(scaledData, columns=["square_feet","price"])

scaledData

```

|   | square_feet | price |
|---|---|---|
| 0 | -1.237705 | -1.114773 |
| 1 | -0.876708 | -0.870916 |
| 2 | -0.515711 | -0.627060 |
| 3 | -0.154713 | -0.383203 |
| 4 | 0.206284 | 0.104510 |
| 5 | 0.567282 | 1.079936 |
| 6 | 2.011271 | 1.811506 |
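Typing the column names by hand works, but as a small variation you can reuse the labels of the original DataFrame. This is only a sketch: it assumes the five rows printed by `data.head(5)` rather than the full CSV, and the names `scaled` and `scaled_df` are mine.

```python
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Assumption: just the five rows printed by data.head(5), not the full CSV.
data = pd.DataFrame({
    "square_feet": [150, 200, 250, 300, 350],
    "price": [6450, 7450, 8450, 9450, 11450],
})

scaled = StandardScaler().fit_transform(data)  # plain NumPy array

# Reuse the original column names and index instead of typing them again.
scaled_df = pd.DataFrame(scaled, columns=data.columns, index=data.index)
print(scaled_df)
```

This way the code keeps working if a column is added or renamed later.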

Observe the values of each feature: they are now on the same scale. Both columns range from roughly -1.2 to 2, instead of hundreds for square_feet and thousands for price.

This makes the calculations faster and easier when we apply a machine learning algorithm.

Let's check if scaling has changed the properties of the data-set or not.

## Before Scaling

```python

import matplotlib.pyplot as plt

plt.scatter(data["square_feet"],data["price"])

```

## After Scaling

```python

plt.scatter(scaledData["square_feet"],scaledData["price"])

```

You can observe that the trend remains the same.
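If you prefer a number to a picture, one way to check the trend is the correlation between the two columns, which should not change when each column is shifted and rescaled. A minimal sketch, assuming only the five rows shown earlier (the `standardize` helper is mine and does by hand what StandardScaler does per column):

```python
import numpy as np

# Assumption: only the five rows printed by data.head(5).
square_feet = np.array([150.0, 200.0, 250.0, 300.0, 350.0])
price = np.array([6450.0, 7450.0, 8450.0, 9450.0, 11450.0])

def standardize(values):
    """Subtract the mean and divide by the (population) standard deviation."""
    return (values - values.mean()) / values.std()

r_before = np.corrcoef(square_feet, price)[0, 1]
r_after = np.corrcoef(standardize(square_feet), standardize(price))[0, 1]

print(r_before, r_after)  # the two values agree up to rounding
```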

# Types Of Feature Scaling

## 1. Standard Scaler

Standard Scaler scales each feature so that its distribution is centered around 0 with a standard deviation of 1.

The formula for standard scaling is

$$z_i = \frac{x_i - \text{mean}(x)}{\text{stdev}(x)}$$
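Here is the formula worked out by hand for the square_feet column. The last two values (400 and 600) are not printed by `data.head(5)`; I reconstructed them from the scaled outputs shown in this post, so treat them as an assumption.

```python
import numpy as np

# First five values come from data.head(5); the last two are reconstructed
# from the scaled outputs in this post (an assumption, not read from the CSV).
square_feet = np.array([150.0, 200.0, 250.0, 300.0, 350.0, 400.0, 600.0])

mean = square_feet.mean()
stdev = square_feet.std()         # population standard deviation (divides by n)
z = (square_feet - mean) / stdev  # the standard scaling formula, element by element

print(mean, stdev)
print(z)
```

The first and last entries come out as about -1.2377 and 2.0113, which matches the StandardScaler output shown earlier.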

Let's check if it is true or not!

## mean before scaling

```python

data.mean()

```

square_feet 321.428571

price 11021.428571

dtype: float64

## mean after scaling

```python

scaledData.mean()

```

square_feet -1.903239e-16

price 1.586033e-16

dtype: float64

So, you can observe that the mean shifts to 0 after standard scaling. The tiny values on the order of 1e-16 are just floating-point rounding error.

## stdev before scaling

```python

data.std()

```

square_feet 149.602648

price 4429.339411

dtype: float64

## stdev after scaling

```python

scaledData.std()

```

square_feet 1.080123

price 1.080123

dtype: float64

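After standard scaling the standard deviation comes to 1. The value 1.080123 printed above is slightly larger only because pandas' `std()` computes the sample standard deviation (dividing by n - 1), while StandardScaler uses the population standard deviation (dividing by n). With 7 rows, the ratio between the two is sqrt(7/6) ≈ 1.080123.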
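The 1.080123 in the output above is worth a closer look, because it is not exactly 1. A short sketch of where it comes from, assuming the seven square_feet values reconstructed from the outputs in this post (the last two are not printed by `data.head(5)`):

```python
import numpy as np

# Assumption: seven values reconstructed from the outputs shown in this post.
square_feet = np.array([150.0, 200.0, 250.0, 300.0, 350.0, 400.0, 600.0])

# Standardize with the population standard deviation (ddof=0).
z = (square_feet - square_feet.mean()) / square_feet.std(ddof=0)

population_std = z.std(ddof=0)  # divides by n
sample_std = z.std(ddof=1)      # divides by n - 1, the pandas default
n = len(z)

print(population_std)
print(sample_std)
print(np.sqrt(n / (n - 1)))     # same value as sample_std
```

So the data really is scaled to unit standard deviation. Calling `scaledData.std(ddof=0)` in pandas would report 1.0.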

### One point to note: the standard scaler works best when the features are roughly normally distributed.

For skewed distributions, we can go for other scaling methods.

## 2. MinMax Scaler

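MinMax scaler rescales each feature to a fixed range, which is 0 to 1 by default, even if the feature contains negative values. A different range, such as -1 to 1, can be requested through the `feature_range` parameter.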

This scaler works better for cases in which the standard scaler might not work so well. If the distribution is not Gaussian or the standard deviation is very small, the min-max scaler works better.
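A quick way to see what range you get is to try a column that contains negative values. This is a small sketch with a made-up column (`values` is hypothetical); `feature_range` is the MinMaxScaler parameter that controls the target range.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical single-feature column that includes negative values.
values = np.array([[-10.0], [0.0], [5.0], [10.0]])

# Default target range is (0, 1), negative inputs or not.
default_scaled = MinMaxScaler().fit_transform(values)

# Ask explicitly for the range (-1, 1).
symmetric_scaled = MinMaxScaler(feature_range=(-1, 1)).fit_transform(values)

print(default_scaled.ravel())
print(symmetric_scaled.ravel())
```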

The formula for the min-max scaler is

$$x_i' = \frac{x_i - \min(x)}{\max(x) - \min(x)}$$

This method is not recommended if your dataset has outliers.
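To see both the formula and the outlier problem in numbers, here is a minimal sketch. The `min_max` helper and the outlier value of 10000 are mine, and the first five values are the square_feet rows shown by `data.head(5)`.

```python
import numpy as np

def min_max(x):
    """Min-max scaling by hand: (x - min) / (max - min)."""
    return (x - x.min()) / (x.max() - x.min())

# Assumption: only the five rows printed by data.head(5).
square_feet = np.array([150.0, 200.0, 250.0, 300.0, 350.0])
scaled = min_max(square_feet)
print(scaled)  # evenly spread between 0 and 1

# Add one made-up extreme value and scale again.
with_outlier = np.append(square_feet, 10000.0)
scaled_with_outlier = min_max(with_outlier)
print(scaled_with_outlier)  # the original five values are squeezed close to 0
```

The single outlier defines the maximum, so all the ordinary values end up packed into a tiny slice of the range.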

Let's observe min-max in action.

First, I will show you how our dataset looks in a kdeplot. If you don't know what a kdeplot is, think of it as a smoothed histogram (a kernel density estimate) and just observe the figure.

```python

import seaborn as sea

```

```python

sea.kdeplot(data['square_feet'])

sea.kdeplot(data['price'])

```

Now, let's apply MinMax Scaler.

```python

minmax = pp.MinMaxScaler()

mmScale = minmax.fit_transform(data)

```

```python

mmScale

```

array([[0. , 0. ],

[0.11111111, 0.08333333],

[0.22222222, 0.16666667],

[0.33333333, 0.25 ],

[0.44444444, 0.41666667],

[0.55555556, 0.75 ],

[1. , 1. ]])

Observe that after min-max scaling all the values lie between 0 and 1.

Let's observe how it looks in a kdeplot.

```python

sea.kdeplot(mmScale[:,0])

sea.kdeplot(mmScale[:,1])

```

One more thing you should observe is that both features now start and end at the same values: each one runs from 0 to 1.

Let's observe if it changes the trend of our dataset or not!

```python

plt.scatter(mmScale[:,0], mmScale[:,1])

```

Cool, our data-set still shows the same trend.

## 3. Robust Scaler

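Robust scaling is used when the data-set has some outliers. It is similar to the min-max scaler, but instead of the minimum and the full range it uses the median and the inter-quartile range (IQR), which outliers barely affect. With scikit-learn's default settings the formula is

$$x_i' = \frac{x_i - Q_2(x)}{Q_3(x) - Q_1(x)}$$

where Q1, Q2 (the median) and Q3 are the first, second and third quartiles of the feature.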
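Here is the robust scaling computation done by hand and compared with scikit-learn. It is a sketch on a small made-up array (`x` is hypothetical, with 100 as the outlier), using RobustScaler with its default settings.

```python
import numpy as np
from sklearn.preprocessing import RobustScaler

# Hypothetical feature: five ordinary values and one outlier.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 100.0])

# Quartiles of the feature: Q1 (25%), median (50%), Q3 (75%).
q1, median, q3 = np.percentile(x, [25, 50, 75])

# Subtract the median, divide by the inter-quartile range.
by_hand = (x - median) / (q3 - q1)

# RobustScaler expects a 2-D array with one column per feature.
from_sklearn = RobustScaler().fit_transform(x.reshape(-1, 1)).ravel()

print(by_hand)
print(from_sklearn)
```

The ordinary values stay within about -1 to 1 while the outlier stays far away, so it no longer decides how the rest of the data is scaled.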

To demonstrate Robust Scaler, I will create a dummy dataset using numpy.

```python

import numpy as np

dummyData = pd.DataFrame({

# Distribution with lower outliers

'x1': np.concatenate([np.random.normal(10, 1, 1000), np.random.normal(1, 1, 25)]),

# Distribution with higher outliers

'x2': np.concatenate([np.random.normal(15, 1, 1000), np.random.normal(50, 1, 25)]),

})

```

```python

sea.kdeplot(dummyData["x1"])

sea.kdeplot(dummyData["x2"])

```

```python

rs = pp.RobustScaler()

rscaledData = rs.fit_transform(dummyData)

rscaledData

```

array([[-0.28695671, -0.43497726],

[-1.57556592, -0.98123282],

[ 0.55617446, -0.03027722],

...,

[-6.08243683, 24.73749711],

[-5.4203302 , 25.81466066],

[-5.94025948, 26.08934034]])

```python

sea.kdeplot(rscaledData[:,0])

sea.kdeplot(rscaledData[:,1])

```

## 4. Normalizer

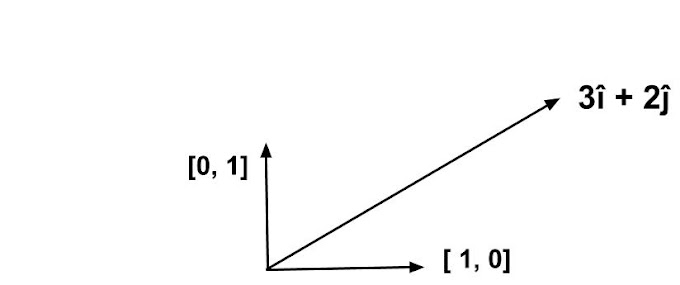

Now, this scaling is very interesting and takes you through some linear algebra. Unlike the previous scalers, which work on each feature (column), the normalizer works on each data point (row): every data point is rescaled so that it lies at a distance of 1 unit from the origin.

Let's understand it with an example.

I will again use numpy to create a dummy data-set.

```python

data = pd.DataFrame({

'x1': np.random.randint(-100, 100, 1000).astype(float),

'y1': np.random.randint(-80, 80, 1000).astype(float),

'z1': np.random.randint(-150, 150, 1000).astype(float),

})

```

```python

data

```

|   | x1 | y1 | z1 |
|---|---|---|---|
| 0 | 92.0 | 0.0 | 123.0 |
| 1 | 76.0 | 44.0 | 132.0 |
| 2 | -37.0 | 76.0 | -148.0 |
| 3 | -1.0 | -77.0 | 131.0 |
| 4 | -29.0 | 28.0 | 6.0 |
| ... | ... | ... | ... |
| 995 | -6.0 | -7.0 | 127.0 |
| 996 | 74.0 | -50.0 | 21.0 |
| 997 | -94.0 | -24.0 | 6.0 |
| 998 | -31.0 | -1.0 | -86.0 |
| 999 | 62.0 | 7.0 | 69.0 |

Let's first talk about the linear algebra side.

You can observe that our data-set has 3 features. If we treat these 3 features, namely x1, y1 and z1, as three different dimensions, then we can plot a point, say (-39.0, -11.0, -105.0), in a coordinate system.

```python

from mpl_toolkits.mplot3d import Axes3D

fig = plt.figure()

ax = fig.add_subplot(111, projection='3d')

ax.scatter(data['x1'],data['y1'],data['z1'])

```

Now, let's scale our data-set using the normalizer method.

```python

normalizer = pp.Normalizer()

scaledData = normalizer.fit_transform(data)

scaledData

```

array([[ 0.59895783, 0. , 0.80078057],

[ 0.4793641 , 0.27752659, 0.83257976],

[-0.21708816, 0.44591081, -0.86835263],

...,

[-0.96707 , -0.24691149, 0.06172787],

[-0.33908651, -0.01093827, -0.9406916 ],

[ 0.66647405, 0.07524707, 0.74172112]])

Now, let's plot our data-set

```python

fig = plt.figure()

ax = fig.add_subplot(111, projection='3d')

ax.scatter(scaledData[:,0],scaledData[:,1],scaledData[:,2])

```

Strange! What happens here is that every point in our data-set is now at a distance of 1 unit from the origin. Because of this, the points all lie on the surface of a sphere of radius 1.

The normalizer scales each data point by dividing its values by the point's magnitude (by default the Euclidean, or L2, norm) in n-dimensional space, where n is the number of features.
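The same computation can be sketched by hand with NumPy. The three rows below are the first three rows of the dummy data-set printed above, and the variable names are mine.

```python
import numpy as np

# First three rows of the dummy data-set shown above (x1, y1, z1).
rows = np.array([
    [92.0, 0.0, 123.0],
    [76.0, 44.0, 132.0],
    [-37.0, 76.0, -148.0],
])

# Euclidean length of each row, kept as a column so it broadcasts row-wise.
norms = np.linalg.norm(rows, axis=1, keepdims=True)

# Divide every row by its own length.
unit_rows = rows / norms

print(unit_rows)
print(np.linalg.norm(unit_rows, axis=1))  # each row now has length 1
```

The rows agree with the first three rows of the Normalizer output above, and each has length 1, which is why the plotted points sit on a sphere.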

I hope that by now you know everything about feature scaling.
