Regression algorithms in machine learning can be of two types:

1. Linear Regression

2. Non-Linear Regression

We know that in linear regression we have the concept of a dependent variable and an independent variable, and the relationship between those variables is linear.

Linear regression in turn has two subtypes, based on the number of independent variables we use:

1. Simple Linear Regression

2. Multiple Linear Regression

In simple linear regression, we have one independent variable and one dependent variable. We have discussed it in detail; if you are not familiar with simple linear regression, read this page first: https://www.creationcodes.org/2020/02/Learn-Linear-Regression-In-Machine-Learning.html

Here, we will discuss the multiple linear regression algorithm used in machine learning.

Multiple Linear Regression

In multiple linear regression, we have more than one independent variable, but only one dependent variable.

Let's understand this with the same data-set we used in the simple linear regression topic.

The question here is to predict the CO2 emission of a given car using this data-set.

When applying any regression algorithm, we first need to identify our dependent and independent variables.

To see which columns are available, load the data-set and look at the first five rows:

    import pandas as pd

    # Load the fuel-consumption data-set and preview the first five rows
    data = pd.read_csv(r"FuelConsumptionCo2.csv")
    data.head(5)

| | MODELYEAR | MAKE | MODEL | VEHICLECLASS | ENGINESIZE | CYLINDERS | TRANSMISSION | FUELTYPE | FUELCONSUMPTION_CITY | FUELCONSUMPTION_HWY | FUELCONSUMPTION_COMB | FUELCONSUMPTION_COMB_MPG | CO2EMISSIONS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2014 | ACURA | ILX | COMPACT | 2.0 | 4 | AS5 | Z | 9.9 | 6.7 | 8.5 | 33 | 196 |
| 1 | 2014 | ACURA | ILX | COMPACT | 2.4 | 4 | M6 | Z | 11.2 | 7.7 | 9.6 | 29 | 221 |
| 2 | 2014 | ACURA | ILX HYBRID | COMPACT | 1.5 | 4 | AV7 | Z | 6.0 | 5.8 | 5.9 | 48 | 136 |
| 3 | 2014 | ACURA | MDX 4WD | SUV - SMALL | 3.5 | 6 | AS6 | Z | 12.7 | 9.1 | 11.1 | 25 | 255 |
| 4 | 2014 | ACURA | RDX AWD | SUV - SMALL | 3.5 | 6 | AS6 | Z | 12.1 | 8.7 | 10.6 | 27 | 244 |

In our case:

Dependent variable: CO2EMISSIONS

Independent variables:

- MODELYEAR

- MAKE

- MODEL

- VEHICLECLASS

- ENGINESIZE

- CYLINDERS

- TRANSMISSION

- FUELTYPE

- FUELCONSUMPTION_CITY

- FUELCONSUMPTION_HWY

- FUELCONSUMPTION_COMB

- FUELCONSUMPTION_COMB_MPG

In our case, we have 12 candidate independent variables (every column except CO2EMISSIONS).

Should we use all of them to determine the CO2 emission?

No. We cannot use all of the independent variables, because the model is linear: notice the word "linear" in multiple linear regression.

Yes, you guessed it right!

We will choose only those independent variables that have a linear relationship with CO2 emission.

How can we know whether they have a linear relationship or not?

By drawing scatter plots. Plot each candidate feature against CO2 emission and select the features whose points fall roughly along a straight line.
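A scatter plot is a visual check. As a rough numerical complement (an addition here, not part of the original walkthrough), one could also compute the Pearson correlation coefficient between a feature and the target; values close to 1 or -1 suggest a strong linear relationship. The sketch below uses only the five preview rows of ENGINESIZE and CO2EMISSIONS shown in the table, which is far too few to draw conclusions from, and the variable names are only illustrative.

```python
import numpy as np

# ENGINESIZE and CO2EMISSIONS copied from the five preview rows of the table
engine_size = np.array([2.0, 2.4, 1.5, 3.5, 3.5])
co2 = np.array([196.0, 221.0, 136.0, 255.0, 244.0])

# np.corrcoef returns a 2x2 correlation matrix; entry [0, 1] is Pearson's r
r = np.corrcoef(engine_size, co2)[0, 1]
print(f"Pearson r between ENGINESIZE and CO2EMISSIONS: {r:.2f}")
```

On the full data-set the same call could be applied to `data["ENGINESIZE"]` and `data["CO2EMISSIONS"]`.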

Now, in simple linear regression, we talked about the best-fit line. We said that the model must be the equation of a line in order to predict the CO2 emission.

There we had the formula Y = X*m + c, where m and c are the parameters that we need to find.

But in multiple linear regression, we have more than one independent variable.

Then what will be the best fit line?

The Best Fit Plane

Yes, you read the heading right. Here we will find a plane (a hyperplane when there are more than two independent variables), because we have more than one independent variable.

Then, what will be our formula?

The formula for the model is also very neat: Y = X1*M1 + X2*M2 + X3*M3 + ..... + Xn*Mn + C.

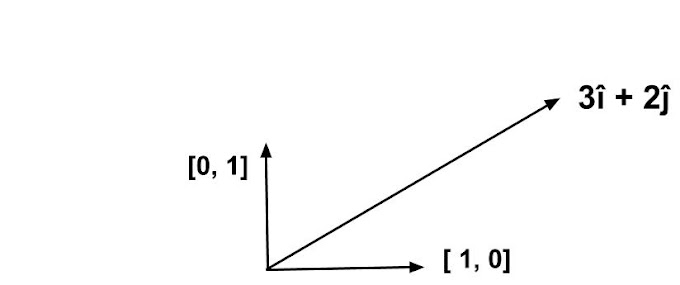

We can write this formula in vector form, as this makes our work and computation easier.

We can write: [1, X1, X2, X3, ....., Xn] . [C, M1, M2, M3, ....., Mn].T

The above notation is just a vector dot product between the independent variables, written as X1, X2, ..., and the transpose of the weights (the parameters), written as M1, M2, M3, ....

Note the 1 at the start of the independent-variable vector: it pairs with C, so the constant term is treated as just one more value in the weight vector.

I will demonstrate the above vector notation with an example:

    import numpy as np

    x1 = 3
    x2 = 5
    x3 = 7

    m1 = 2  # or you can say it as c
    m2 = 3
    m3 = 4
    m4 = 5

    X = np.array([1, x1, x2, x3])      # creating vector X
    M = np.array([m1, m2, m3, m4]).T   # transpose of M (a no-op for a 1-D array)

    Formula = X @ M  # same as m1 + m2*x1 + m3*x2 + m4*x3
    Formula

Output

66

So, let's come back to our topic.

In multiple linear regression, we find a best-fit plane.

The concept is the same: you have to find the parameters m1, m2, m3, ... such that the residual error is as small as possible.

Residual Error

Residual Error is calculated as Actual value - predicted value.

Now, suppose you predicted the CO2 emission as 150 for some values of the parameters m1, m2, m3, ..., but the actual value of the CO2 emission is 200.

Then the error will be 200 - 150 = 50.

We then need to try other values of the weights (parameters) so that the error gets smaller. We repeat this until the error is as small as possible; on real data it will rarely reach exactly 0.
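For linear models, the weights that minimise the sum of squared residuals can also be computed directly instead of by repeated guessing (ordinary least squares). The following is a minimal sketch on made-up data using NumPy's `np.linalg.lstsq`; the data, the "true" weights and the noise level are all assumptions chosen for illustration, not values from the CO2 data-set.

```python
import numpy as np

rng = np.random.default_rng(0)

# Made-up data: 50 samples, 2 features, known weights plus a little noise
X = rng.uniform(0, 10, size=(50, 2))
true_m = np.array([3.0, -2.0])
true_c = 5.0
y = X @ true_m + true_c + rng.normal(0, 0.1, size=50)

# Prepend a column of ones so the constant c is learned as one more weight
X1 = np.column_stack([np.ones(len(X)), X])

# Least-squares solution: minimises the sum of squared residuals
weights, *_ = np.linalg.lstsq(X1, y, rcond=None)

residuals = y - X1 @ weights  # actual value - predicted value
print("estimated [c, m1, m2]:", weights)
print("mean squared residual:", np.mean(residuals ** 2))
```

Because the data contain noise, the residuals shrink to a small value but not to exactly 0, even for the best possible weights.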

Python Library For Multiple Linear Regression

Now, we don't need to implement the algorithm ourselves. Python makes our lives easy here: we will use a pre-built Python library to build our model.

We will use the sklearn library, which contains the linear_model module.

Multiple Regression Model Development

Now, let's select some independent features by observing their linearity with respect to CO2 emissions.

    import matplotlib.pyplot as plt

CYLINDERS

    plt.scatter(data["CYLINDERS"], data["CO2EMISSIONS"])

FUELCONSUMPTION_CITY

    plt.scatter(data["FUELCONSUMPTION_CITY"], data["CO2EMISSIONS"])

FUELCONSUMPTION_HWY

    plt.scatter(data["FUELCONSUMPTION_HWY"], data["CO2EMISSIONS"])

FUELCONSUMPTION_COMB

    plt.scatter(data["FUELCONSUMPTION_COMB"], data["CO2EMISSIONS"])

Now, you can observe that these features show a linear relationship with CO2 emissions, so we will select them, together with ENGINESIZE, as independent variables.

    independent_variables = np.asanyarray(
        data[["ENGINESIZE", "CYLINDERS", "FUELCONSUMPTION_CITY",
              "FUELCONSUMPTION_HWY", "FUELCONSUMPTION_COMB"]]
    )
    dependent_variable = np.asanyarray(data["CO2EMISSIONS"])

train_test_split

We will split our data-set into a train-set and a test-set. The train-set is used for model development and the test-set is used for model evaluation.
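To make the idea concrete, here is a minimal sketch of what a random split does, written by hand with NumPy on made-up data. The array contents, the seed and the variable names are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(42)

# Made-up data: 100 samples with 2 features each, and one target per sample
X = np.arange(200).reshape(100, 2)
y = np.arange(100)

# Shuffle the row indices, then cut them into a test part and a train part
indices = rng.permutation(len(X))
n_test = int(round(0.33 * len(X)))  # hold out 33% of the rows for testing
test_idx, train_idx = indices[:n_test], indices[n_test:]

x_train, x_test = X[train_idx], X[test_idx]
y_train, y_test = y[train_idx], y[test_idx]

print(x_train.shape, x_test.shape)
```

Shuffling before cutting matters: if the file is sorted (for example by manufacturer), taking the last rows as the test-set would not give a representative sample.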

The sklearn library gives us a prebuilt tool to split our data-set.

    from sklearn.model_selection import train_test_split

    # Hold out 33% of the rows for testing; the rest is used for training
    x_train, x_test, y_train, y_test = train_test_split(
        independent_variables, dependent_variable, test_size=0.33
    )

The above code splits our data-set into a 33% test-set and the remainder as the train-set. Now, let's import the linear regression model from sklearn and fit it on the train-set:

    from sklearn import linear_model

    regression_model = linear_model.LinearRegression()
    regression_model.fit(x_train, y_train)

Output

LinearRegression(copy_X=True, fit_intercept=True, n_jobs=None, normalize=False)

Let's observe the weights or the coefficients:

    regression_model.coef_

Output

array([11.66122879, 7.2624812 , -3.55625977, -2.83771164, 15.67117827])
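Note that `coef_` holds only the weights of the features; the constant term C of the formula is stored separately in `intercept_`. The sketch below, on made-up noise-free data (an assumption for illustration, not the CO2 data-set), shows that a prediction is just the dot product of the features with `coef_` plus `intercept_`.

```python
import numpy as np
from sklearn import linear_model

# Made-up, noise-free data generated from y = 2*x1 + 3*x2 + 10
X = np.array([[1.0, 2.0], [2.0, 1.0], [3.0, 5.0], [4.0, 3.0], [5.0, 8.0]])
y = 2 * X[:, 0] + 3 * X[:, 1] + 10

model = linear_model.LinearRegression()
model.fit(X, y)

# One weight per feature in coef_, the constant term C in intercept_
manual = X @ model.coef_ + model.intercept_

print("weights:", model.coef_, "intercept:", model.intercept_)
print("matches predict():", np.allclose(manual, model.predict(X)))
```

The matrix product `X @ model.coef_` applies the formula Y = X1*M1 + X2*M2 + ... + C to every row at once.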

Model evaluation

For multiple linear regression models, we can evaluate the model with the explained variance regression score.

If $\hat{y}$ is the estimated target output, $y$ the corresponding (correct) target output, and Var is the variance (the square of the standard deviation), then the explained variance is estimated as follows:

$$\text{explained\_variance}(y, \hat{y}) = 1 - \frac{\mathrm{Var}\{y - \hat{y}\}}{\mathrm{Var}\{y\}}$$
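As a small worked illustration of the formula, the sketch below computes the explained variance by hand with NumPy. The helper name `explained_variance` and the numbers are hypothetical, chosen only for this example.

```python
import numpy as np

def explained_variance(y_true, y_pred):
    """Return 1 - Var(y - y_hat) / Var(y)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return 1.0 - np.var(y_true - y_pred) / np.var(y_true)

y_true = np.array([200.0, 150.0, 100.0, 250.0])

perfect = explained_variance(y_true, y_true)         # no error at all
shifted = explained_variance(y_true, y_true - 10.0)  # every prediction 10 too low
noisy = explained_variance(y_true, [150.0, 160.0, 110.0, 240.0])

print(perfect, shifted, noisy)
```

One thing this makes visible: a constant offset in every prediction leaves the variance of the residuals at 0, so the explained variance alone does not reveal such a systematic bias.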

We will now predict the values of CO2 emission using the test-set as input.

    co2_prediction = regression_model.predict(x_test)

co2_prediction contains the predicted value for each row of x_test. Let's observe the error in our model.

The mean absolute error = mean(|actual value - predicted value|)

The mean squared error = mean((actual value - predicted value)**2)

In our case, the actual value is y_test.

And the predicted value is co2_prediction.

    MAE = np.mean(np.absolute(y_test - co2_prediction))  # mean absolute error
    MSE = np.mean((y_test - co2_prediction) ** 2)        # mean squared error

Output

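MAE

17.948083587765744

MSE

576.5233784396162

Let's calculate the score of the model. For LinearRegression, score returns the coefficient of determination (R²), which is closely related to the explained variance described above:

    regression_model.score(x_test, y_test)

Output

0.8700007816166958

The best possible value is 1; lower values indicate a worse fit.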
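The same error measures are also available as ready-made functions in `sklearn.metrics`. The sketch below compares them with the hand-written versions on four made-up values (the first pair reuses the 200 versus 150 example from the residual error section); the numbers are assumptions for illustration, not results from the CO2 model.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

y_true = np.array([200.0, 150.0, 100.0, 250.0])
y_pred = np.array([150.0, 160.0, 110.0, 240.0])

# Library versions
mae = mean_absolute_error(y_true, y_pred)
mse = mean_squared_error(y_true, y_pred)
r2 = r2_score(y_true, y_pred)

# The same quantities written out by hand
mae_manual = np.mean(np.abs(y_true - y_pred))
mse_manual = np.mean((y_true - y_pred) ** 2)
ss_res = np.sum((y_true - y_pred) ** 2)         # squared error of the model
ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # squared error of predicting the mean
r2_manual = 1 - ss_res / ss_tot

print(mae, mse, r2)
```

Written this way, R² compares the model against always predicting the mean of the actual values, which is why a model that does worse than that baseline gets a negative score.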
